This week’s readings focused on how surveillance is embedded in our culture and day-to-day practices, drawing on critical scholarship to connect automation with multiple dimensions of inequity. I really enjoyed both pieces from JITP, especially the one by folks at CUNY. Given the many identities that coexist at CUNY, it was interesting to see how these concerns surfaced in different ways for different people. I’ve been thinking about tech’s role in surveillance pedagogy since before the pandemic, and since its onset I’ve seen very questionable partnerships and collaborations between media entities and higher education institutions crop up all over. Much like the example in the introduction to the Caines & Silverman (2021) piece, one of the university campuses I work at invested in Perusall, a proctoring tool of sorts that surveils students in detail as they engage with *any* course content, giving the instructor metadata on their interactions (such as time spent on a reading). A more widespread example is how entities like Google partnered with schools across the country to distribute Chromebooks (often with access to Google Classroom and other educational tools) to students who needed devices to attend class virtually while schools were still in crisis mode. While, sure, noble, I guess, all of those students are now subject to tracking without knowing that their data is part of the bargain for their school’s access to the tech. Media companies are now major players shaping educational policy, which is not a positive thing.

These articles also brushed up against what Jay Dolmage (2018) calls “diffused surveillance.” Dolmage (2018) writes about eugenics and how deeply embedded it is in our history, using the immigration process at Ellis Island around 1900 as a focal point. The entire process is far too much to explain here, but in short, workers at Ellis Island were given a guide to identifying the forty-something “criminal types,” which were, of course, based solely on physical characteristics. This handbook was later released more widely in case someone who was not uber white (and I say “uber” only because many skin tones regarded as white today were still rejected at the time) was admitted anyway; the idea was that surveillance of that person would then be diffused into their community, their neighbors, and their schools. It became the responsibility of those around them to keep them in check. Later in the 20th century, teachers were given a guidebook for identifying racial markers in students whose families chose not to disclose their racial identity. On top of that, we know that grading standards are built around the norms of straight white men (Gallagher, 1999), so the students being surveilled were already starting at a deficit.

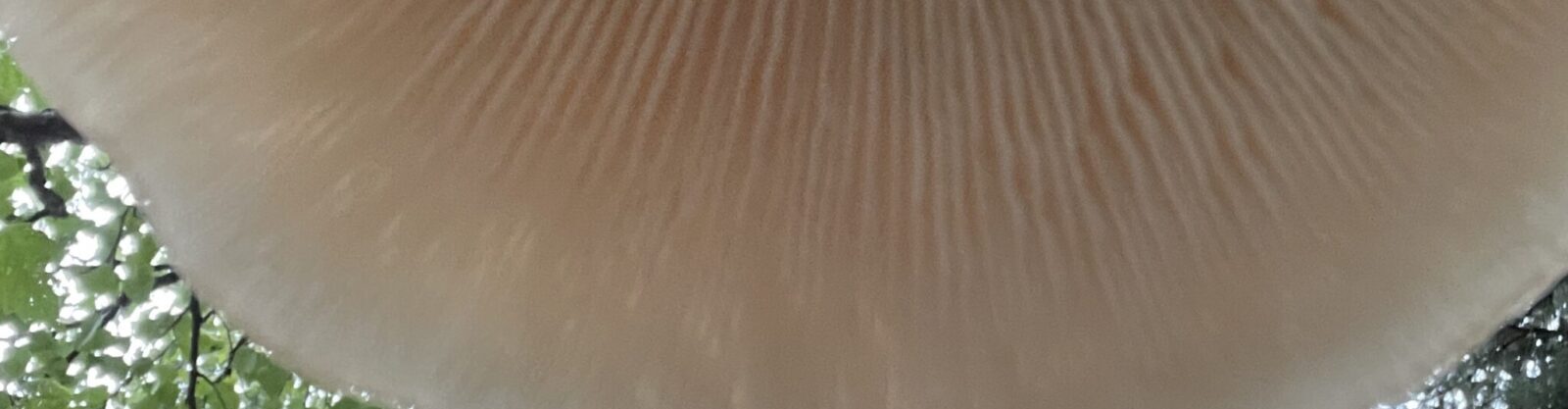

A quick final comment to touch on the AI conversation: I tried ChatGPT back in December, before it blew up in the media. I was building an app for a class and asked it to “code me an application using Python that did XYZ and used this dataset: *link*.” What it spit back out was strikingly close to perfect code, and with minor tweaks from human intervention, it worked. The art discourse is interesting given the art community’s recent debates around NFTs, but I think folks who are concerned about AI in the visual space need to understand it the same way those who fear it in the writing space do. Understanding the technology and knowing how to identify the discrepancies between human and computer output is a form of digital literacy and should be a teaching tool. For example, AI art struggles with consistency and minor distortions, and for some reason it can’t draw hands. As for whether it’s fair to develop an AI to create art and then enter an art competition with it, I’ll just stay out of that conversation, haha.

This is so interesting, Anthony. I’ll be curious to hear more about the coding application you generated through ChatGPT. And your surveillance questions are spot-on, especially when considering actions that look like benevolence but in fact have a potentially monetizable outcome hidden from view.

Anthony,

First, a comment that reflects my tendency to pay attention to voice in writing: your voice really comes through in this post! I really enjoyed the laugh at the end.

Second, I might have missed it, but could you share the title of the Jay Dolmage (2018) text? And finally, on the note about AI not being able to draw hands: according to artists and instructors, hands are the most difficult thing to draw.

I feel very strongly about personality and voice in writing, so I appreciate that, haha. Dolmage’s full book title is “Disabled Upon Arrival: Eugenics, Immigration, and the Construction of Race and Disability.” I’ll link it below!

https://ohiostatepress.org/books/titles/9780814213629.html